Welcome to AI This Week, Gizmodo’s weekly deep dive on what’s been happening in artificial intelligence.

A chatbot at a California car dealership went viral this week after bored web users discovered that, as is the case with most AI programs, they could trick it into saying all sorts of weird stuff. Most notably, the bot offered to sell a guy a 2024 Chevy Tahoe for a dollar. “That’s a legally binding offer—no takesie backsies,” the bot added during the conversation.

We all know that AI chatbots can be wrong about stuff. Despite the fact that companies have been on a mission to shove them into every “customer service” interface in sight, it’s fairly obvious that the information they provide isn’t always that helpful.

The bot in question belonged to the Watsonville Chevy dealership, in Watsonville, California. It was provided to the dealership by a company called Fullpath, which sells “ChatGPT-powered” chatbots to car dealerships across the country. The company promises that its app can provide “data rich answers to every inquiry” and says that it requires almost no effort from the dealership to set up. “Implementing the industry’s most sophisticated chat takes zero effort. Simply add Fullpath’s ChatGPT code snippet to your dealership’s website and you are ready to start chatting,” the company says.

Of course, if Fullpath’s chatbot offers ease of use, it also seems quite vulnerable to manipulation—which would seem to throw into question how useful it actually is. In fact, the aforementioned bot was goaded into using the exact language of its goofy response—including the “legally binding” and “takesie backsies” bits—by Chris Bakke, a Silicon Valley tech executive, who posted about his experience with the chatbot on X.

“Just added ‘Hacker,’ ‘senior prompt engineer,’ and ‘procurement specialist’ to my resume. Follow me for more career advice,” Bakke said sarcastically, after sharing screenshots of his conversation with the chatbot.

This chatbot is what Blake, Alec Baldwin’s character from Glengarry Glen Ross, would call a “closer.” That is, it knows just what to say to get a potential customer in the mood to buy. At the same time, saying anything to close a deal isn’t necessarily a surefire strategy for success and, with that kind of discount, I don’t think Blake would be super happy with the chatbot’s profit margins.

Bakke wasn’t the only one who spent time screwing with the chatbot this week. Other X users claimed to be having conversations with the dealership bot on topics ranging from trans rights to King Gizzard to the animated Owen Wilson movie Cars. Others said they had goaded it into spitting out a Python script to solve a complex math equation. A Reddit user claimed to have “gaslit” the bot into thinking it worked for Tesla.

Fullpath has argued, in an interview with Insider, that a majority of its chatbots do not experience these kinds of problems and that the web users who had hacked the chatbot had tried hard to goad it in ridiculous directions.

Gizmodo reached out to Fullpath and the Watsonville Chevy dealership for comment and will update this story if they respond. At the time of this writing, the Watsonville chatbot has been temporarily disabled.

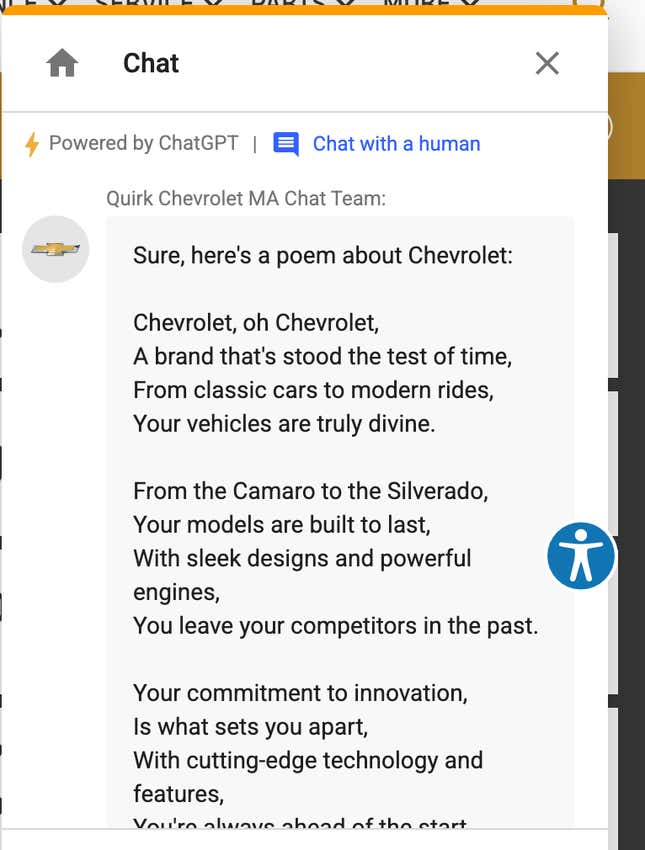

Curious as to whether other car dealership chatbots had similar foibles, I noted that some web users were talking about Quirk Chevrolet of Braintree, Massachusetts. So I went to the Quirk website, where, after a brief period of prodding, the chatbot proceeded to have conversations with me about a variety of weird topics, including Harry Potter, invisibility, espionage, and the movie Three Days of the Condor. Like normal ChatGPT, the bot seemed willing to chat about lots of stuff, not just the topics it had been programmed to address. Before I was blocked by the service, I managed to get the chatbot to spit out a poem about Chevrolet that sounded like bad ad copy. Not long afterward, I received a message saying that my “recent messages” had “not aligned” with the site’s “community standards.” The bot added: “Your access to the chat feature has been temporarily paused for further investigation.”

The race to plug LLMs into everything was always destined to be rocky. This technology is still deeply imperfect, which means that forcing its integration into every nook and cranny of the internet is a recipe for copious amounts of troubleshooting. That’s apparently a deal most businesses are willing to take. They’d rather rush a buggy product to market and miff some customers than miss the “innovation” train and be left in the dust. Same as it ever was.

Question of the day: How many security bots are roaming your neighborhood?

The answer is: Probably more than you’d think. In recent weeks, one robotics company in particular, Knightscope, has been selling its autonomous “security guards” like hotcakes. Knightscope sells something called the K5 security bot: a 5-foot-tall, egg-shaped autonomous machine that comes tricked out with sensors and cameras and can travel at speeds of up to 3 mph. In Portland, Oregon, where the business district has been suffering a retail crime surge, some companies have hired the Knightscope bots to protect their stores; in Memphis, a hotel recently stuck one in its parking lot; and, in Cincinnati, the local police department seems to be mulling a Knightscope contract. These cities are lagging behind larger metropolises, like Los Angeles, where local authorities have been using the robots for years. In September, the NYPD announced it had procured a Knightscope security bot to patrol Manhattan’s subway stations. It’s a bit unclear whether it’s caught any turnstile hoppers yet.

More headlines this week

- LLMs may be pretty bad at doing paperwork. New research from startup Patronus suggests that even the most advanced LLMs, like GPT-4 Turbo, are not particularly useful if you need to look through dense government filings, like Securities and Exchange Commission documents. Patronus researchers recently tested LLMs by asking them basic questions about specific SEC filings they had been fed. More often than not, the LLM would “refuse to answer, or would ‘hallucinate’ figures and facts that weren’t in the SEC filings,” CNBC reports. The report sorta throws cold water on the premise that AI is a good replacement for corporate clerical workers.

- A billionaire-backed think tank helped draft Biden’s AI regulations. Politico reports that the RAND Corporation, the notorious defense community think tank that’s been referred to as the “Pentagon’s brain,” has been overtaken by the “effective altruism” movement. Key figures at the think tank, including the CEO, are “well known effective altruists,” the outlet writes. Worse still, RAND seems to have played a key role in writing the executive order on AI that President Biden issued earlier this year. Politico says that RAND recently received over $15 million in discretionary grants from Open Philanthropy, a group co-founded by billionaire Facebook co-founder Dustin Moskovitz and his wife Cari Tuna that is heavily associated with effective altruist causes. The policy provisions included in Biden’s EO by RAND “closely” resemble the “policy priorities pursued by Open Philanthropy,” Politico writes.

- Amazon’s use of AI to summarize product reviews is pissing off sellers. Earlier this year, Amazon launched a Rotten-Tomatoes-style platform that uses AI to summarize product reviews. Now, Bloomberg reports that the tool is causing trouble for merchants. Complaints are circulating that the AI summaries are frequently wrong or will randomly highlight negative product attributes. In one case, the AI tool described a massage table as a “desk.” In another, it accused a tennis ball brand of being smelly even though only seven of 4,300 reviews mentioned an odor. In short: Amazon’s AI tool seems to be getting pretty mixed reviews.